Building Enterprise-Ready Secure AI Agents with Skyflow

Discover how Skyflow helps build secure AI agents that protect sensitive data and ensure compliance in the modern AI ecosystem.

From managing sensitive patient records in healthcare to safeguarding personal financial data, and even protecting intellectual property in enterprises, AI agents are increasingly trusted with sensitive information that demands advanced security and privacy measures.

So, how can businesses create AI systems that prioritize user privacy while staying compliant? This article outlines the key principles, technologies, and best practices for building AI agents that safeguard privacy by design.

What Are AI Agents, and Where Do They Get Used?

An AI agent is an autonomous or semi-autonomous software program designed to perform tasks or make decisions on a user's or system's behalf. Unlike traditional tools, AI agents go beyond static responses by leveraging advanced reasoning, context awareness, and decision-making capabilities to act autonomously.

Powered by large language models (LLMs), modern AI agents combine natural language understanding with decision-making and task execution capabilities. Unlike standalone LLMs, which only generate answers or content based on input, AI agents go further by querying databases, analyzing results, and delivering actionable insights autonomously. This enables businesses to automate intricate workflows, from customer support to secure data processing, with minimal human intervention.

At a high level, agents can be categorized into three primary types: Reactive agents that respond to inputs without memory, Proactive agents that take initiative using context and reasoning, and Interactive agents that collaborate with systems to process data and deliver tailored results securely.

From a real-world perspective, these agent types can often be simplified into two broader categories:

- Conversational Agents, which focus on natural language interactions with users.

- Workflow Agents, which manage and automate tasks or processes.

This abstraction helps to map the underlying technology to practical use cases more effectively.

What Are the Essential Building Blocks of an AI Agent?

Earlier agents were built using rule-based systems or traditional AI techniques, while today’s agents leverage LLMs to provide more dynamic, context-aware, and intelligent interactions. An AI agent today has three fundamental components:

- Model

The model is the agent's core, responsible for understanding inputs and generating outputs. It can be fine-tuned or adapted for specific applications, ensuring the agent is optimized for targeted use cases. The choice of model (e.g., OpenAI's GPT, Meta's LLaMA, or other foundational models) and the data used to train and update it significantly influence the agent's accuracy, reliability, and ethical behavior.

- Tools and Action Execution

Tools enable agents to connect with external systems, gather information, or execute tasks outside their initial training data. Examples include:

- Retrieval-Augmented Generation (RAG): Accessing dynamic knowledge bases or documents.

- APIs: Integrating with services like payment processors, CRM platforms, or any external system.

- Web Searches: Gathering real-time information.

- Databases/Calculators: Performing computations or querying structured data.

Tools enhance the agent's real-world applicability, but they also introduce risk because they give the agent access to sensitive data. Secure handling of the data accessed through these tools is therefore paramount to prevent exposure, misuse, or compliance violations.

- Memory and Reasoning Engine

The memory and reasoning engine governs the agent's ability to retain context and make informed decisions. It synthesizes prior interactions and output data to ensure coherent and purposeful behavior. Key aspects include:

- Reasoning Frameworks: Techniques like Chain-of-Thought (CoT) or ReAct (Reasoning + Acting) enable structured problem-solving.

- Memory Systems: Persistent or ephemeral memory allows the agent to adapt and personalize over time, enhancing user experiences.

These components are critical for ensuring that the agent’s actions align with its goals and adapt dynamically to complex tasks.
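
The way the three components fit together can be sketched in a few lines. This is a minimal illustration, not a production agent: `stub_model` stands in for a real LLM call, `lookup_order` is a hypothetical tool, and the `Agent` class and its fields are names invented for this example. The loop follows the ReAct pattern loosely: the model picks an action, the agent runs the matching tool, and the observation is written to memory before the next step.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


def lookup_order(order_id: str) -> str:
    """Hypothetical tool: stands in for a database or API call."""
    orders = {"A100": "shipped", "B200": "processing"}
    return orders.get(order_id, "unknown")


def stub_model(question: str, memory: List[str]) -> Tuple[str, str]:
    """Stand-in for an LLM call. Returns (action, argument).

    A real model would reason over the question and memory; this stub
    requests the tool once, then answers from the recorded observation.
    """
    observations = [m for m in memory if m.startswith("observation:")]
    if not observations:
        order_id = question.split()[-1].strip("?")
        return "lookup_order", order_id
    status = observations[-1].split(":", 1)[1].strip()
    return "final_answer", f"Your order is {status}."


@dataclass
class Agent:
    tools: Dict[str, Callable[[str], str]]
    memory: List[str] = field(default_factory=list)  # ephemeral, per session
    max_steps: int = 5

    def run(self, question: str) -> str:
        self.memory.append(f"user: {question}")
        for _ in range(self.max_steps):
            action, arg = stub_model(question, self.memory)
            if action == "final_answer":
                self.memory.append(f"agent: {arg}")
                return arg
            if action not in self.tools:
                # Refuse tools that were never registered for this agent.
                self.memory.append(f"error: tool {action} not allowed")
                continue
            self.memory.append(f"observation: {self.tools[action](arg)}")
        return "Stopped: step limit reached."


agent = Agent(tools={"lookup_order": lookup_order})
answer = agent.run("Where is order A100?")
```

Everything a tool returns ends up in memory and in later model calls, which is why the data a tool can reach matters so much.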

However, there’s one piece we have not discussed here: AI agents often require access to sensitive data to perform their tasks effectively. For instance, a customer service AI agent may access transaction histories to resolve disputes, or a healthcare scheduling agent might need medical records to manage appointments accurately. This level of access introduces risks, including data privacy, security, and regulatory compliance challenges.

What Are Privacy-Preserving AI Agents, and Why Do They Matter?

AI agents heavily rely on data, but this reliance introduces significant concerns around privacy and security, particularly when sensitive data is involved. Without robust safeguards, sensitive information is vulnerable, putting organizations at risk of regulatory violations and reputational harm. Privacy-preserving AI agents address these challenges by implementing tools and frameworks that protect data while ensuring compliance with regulations like GDPR, CCPA, and HIPAA.

Doing so requires a privacy-first architecture that minimizes data exposure and protects sensitive information throughout its lifecycle. This entails applying principles such as:

- Data minimization: Collecting and processing only the data necessary for specific tasks.

- Strict access controls: Restricting data access to authorized users and systems.

- Encryption: Safeguarding data both at rest and in transit.

- Anonymization: Ensuring sensitive information cannot be linked back to individuals.
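
Two of these principles can be sketched with the Python standard library alone. The example below is illustrative and rests on assumptions: the field names, the `ALLOWED_FIELDS` allowlist, and the hard-coded key are all hypothetical, and a real key would come from a secrets manager. Note that a keyed hash is pseudonymization rather than full anonymization, since anyone holding the key can recompute the mapping. Encryption and access control are left out to keep the sketch short.

```python
import hashlib
import hmac

# Hypothetical per-task allowlist: the only fields a scheduling agent needs.
ALLOWED_FIELDS = {"patient_ref", "appointment_type", "preferred_date"}

# Assumption: in practice this key would live in a secrets manager.
PSEUDONYM_KEY = b"example-key-do-not-use-in-production"


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256, truncated)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def minimize(record: dict) -> dict:
    """Keep only allowlisted fields, swapping the raw ID for a pseudonym."""
    slim = dict(record)
    slim["patient_ref"] = pseudonymize(slim.pop("patient_id"))
    return {k: v for k, v in slim.items() if k in ALLOWED_FIELDS}


patient_record = {
    "patient_id": "MRN-0042",
    "name": "Jane Doe",
    "ssn": "123-45-6789",
    "appointment_type": "follow-up",
    "preferred_date": "2025-03-14",
}
agent_view = minimize(patient_record)
```

The agent receives only `agent_view`, so the name and SSN never enter its context, and the stable pseudonym still lets it correlate records for the same patient.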

This architecture scales easily to handle more complex environments, such as multi-modal systems that simultaneously process varied data types (text, images, or speech), and multi-agent systems, where multiple agents interact securely to share data. In both cases, privacy-preserving agents ensure that data is handled securely across various agents and modalities, reducing the risk of exposure while enhancing performance.

Privacy-preserving AI agents mitigate the risks of data breaches, build trust among users, align with regulatory requirements, and promote responsible AI innovation. As AI becomes an integral part of industries worldwide, embracing privacy-first practices is no longer optional; it's essential.

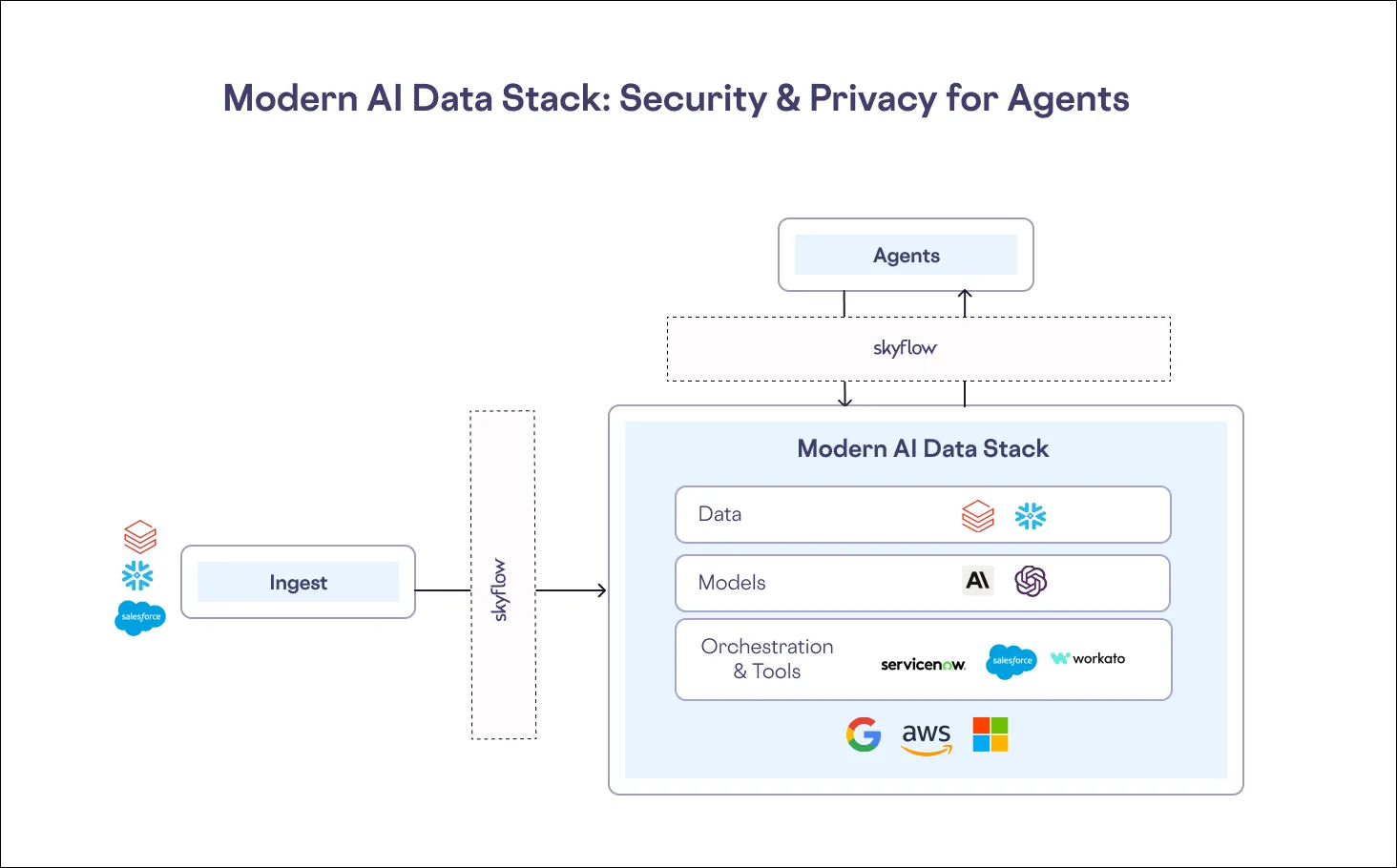

How Skyflow Enables Privacy-Preserving AI Agents

Building privacy-preserving agents requires a robust privacy-first approach. Skyflow’s Data Privacy Vault is designed to ensure security and privacy at every step of the agent lifecycle: the model, the data, and the tools that enable agents to act on the user's behalf.

While building a privacy-preserving AI agent, Skyflow enables the following:

- Protecting Sensitive Information in Model Training and Fine-Tuning

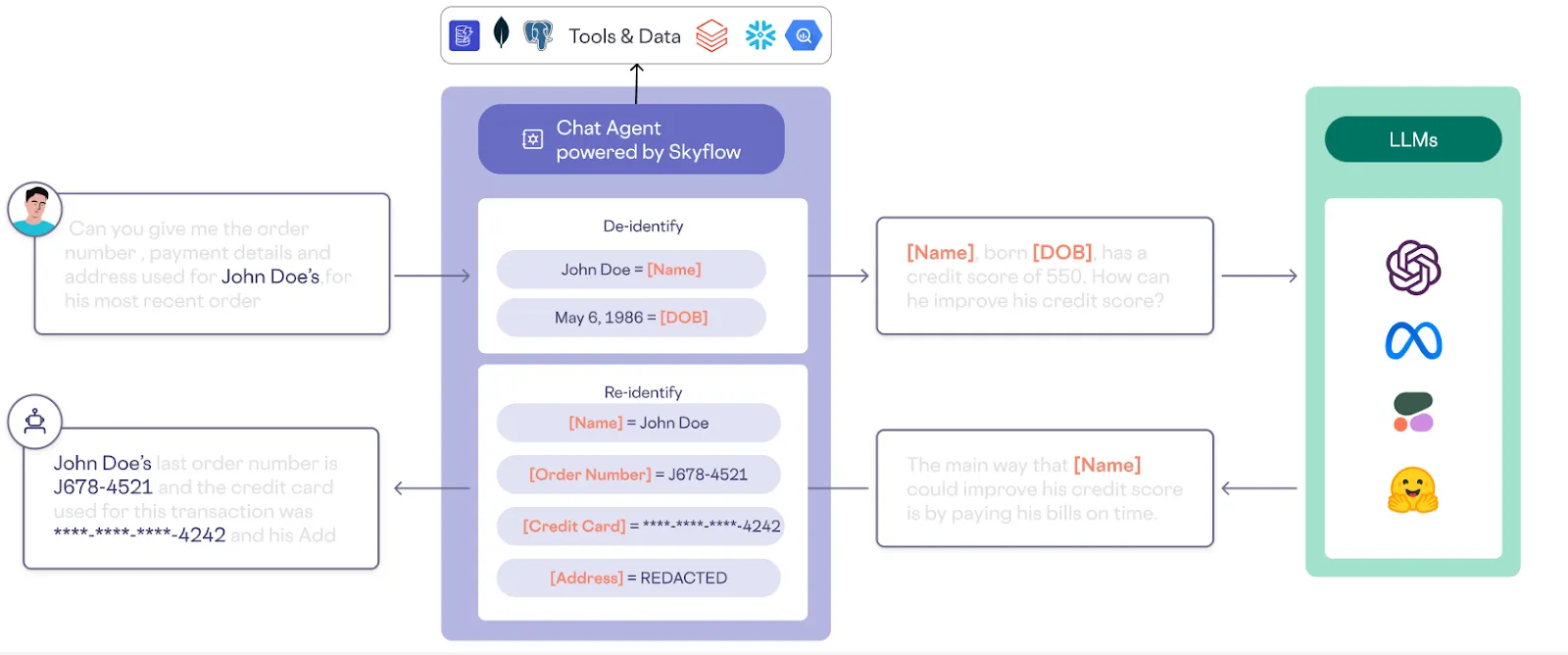

Skyflow ensures that sensitive data remains protected throughout the model training and fine-tuning processes. By encrypting, tokenizing, or de-identifying sensitive information, Skyflow prevents private data from being inadvertently embedded into the model, reducing the risk of exposure during future use.

- Securing External Tool Interactions

AI agents rely on external tools, APIs, or extensions for data retrieval and processing. Skyflow acts as a gatekeeper, ensuring that these tools only access de-identified or masked data and preventing any unauthorized exposure of sensitive information.

- Granular Access Control and Data Governance

Skyflow enforces fine-grained access controls to ensure agents can only access data they are explicitly authorized to view. This includes applying advanced data masking, redaction, or tokenization techniques to limit exposure to sensitive information while maintaining the functionality needed to complete tasks effectively.
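
The general idea behind tokenization and policy-based access can be shown with a toy in-memory vault. This is a conceptual sketch only and does not represent Skyflow's API: `ToyVault`, `POLICIES`, and the role names are hypothetical, and a real vault would add encryption, persistence, and authentication.

```python
import secrets
from typing import Dict

# Hypothetical role-to-policy mapping; roles not listed get "redact".
POLICIES = {"billing_service": "plain", "support_agent": "mask"}


class ToyVault:
    """In-memory stand-in for a data privacy vault (illustration only)."""

    def __init__(self) -> None:
        self._token_to_value: Dict[str, str] = {}
        self._value_to_token: Dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        """Return a random token; a given value always maps to one token."""
        if value not in self._value_to_token:
            token = "tok_" + secrets.token_hex(8)
            self._value_to_token[value] = token
            self._token_to_value[token] = value
        return self._value_to_token[value]

    def reveal(self, token: str, role: str) -> str:
        """Return the value in the form the caller's role may see."""
        value = self._token_to_value[token]
        policy = POLICIES.get(role, "redact")
        if policy == "plain":
            return value
        if policy == "mask":
            if len(value) <= 4:
                return "*" * len(value)
            return "*" * (len(value) - 4) + value[-4:]
        return "[REDACTED]"


vault = ToyVault()
card_token = vault.tokenize("4111111111111111")

# Only the token goes into training data, prompts, or tool calls.
training_row = {"customer": "c-17", "card": card_token}

masked = vault.reveal(card_token, "support_agent")
```

Because the token is random rather than derived from the card number, a model trained on `training_row` cannot memorize the original value, while an authorized service can still recover it through `reveal`.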

Skyflow’s platform integrates effortlessly with popular agent frameworks such as LangGraph, LlamaIndex, AutoGen, Vertex AI, Mosaic ML, CreoAI, and Swarms. This allows businesses to incorporate privacy-preserving capabilities without disrupting existing workflows or requiring custom development.

Once an agent is built and deployed within a business workflow, it is critical to ensure that sensitive information is not compromised while the agent's usage and compliance are monitored.

- End-to-End Prompt and Response Management

To prevent sensitive information from being exposed during agent interactions, Skyflow uses strict measures to sanitize prompts and responses. By filtering sensitive details from user inputs and redacting private data in agent outputs, Skyflow ensures privacy is maintained at every touchpoint.

- Comprehensive Data Auditing and Compliance

Skyflow provides full auditability of data usage within the agent’s workflows. Detailed logs track every data access, tool interaction, and information flow, ensuring accountability and simplifying compliance with privacy regulations like GDPR, CCPA, and HIPAA.
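
A simplified picture of prompt and response sanitization with an audit trail might look like the following. It is a sketch under stated assumptions, not Skyflow's implementation: the two regular expressions are deliberately naive, `fake_model` stands in for an LLM, and `redact`, `log_event`, and `guarded_call` are names invented for this example.

```python
import re
from datetime import datetime, timezone
from typing import Callable, Dict, List, Tuple

# Deliberately simple patterns for illustration; real detection of
# sensitive data needs far broader coverage than two regexes.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

audit_log: List[Dict[str, str]] = []


def redact(text: str) -> Tuple[str, Dict[str, str]]:
    """Swap detected values for placeholders; return text and mapping."""
    mapping: Dict[str, str] = {}
    for label, pattern in PATTERNS.items():
        def substitute(match: re.Match, label: str = label) -> str:
            placeholder = f"[{label}_{len(mapping) + 1}]"
            mapping[placeholder] = match.group(0)
            return placeholder
        text = pattern.sub(substitute, text)
    return text, mapping


def log_event(event: str, detail: str) -> None:
    """Append an audit entry. Callers pass only redacted text."""
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "event": event,
        "detail": detail,
    })


def guarded_call(prompt: str, model: Callable[[str], str]) -> str:
    """Sanitize the prompt, call the model, then sanitize the response."""
    safe_prompt, _ = redact(prompt)
    log_event("prompt", safe_prompt)
    safe_response, _ = redact(model(safe_prompt))
    log_event("response", safe_response)
    return safe_response


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM that leaks an address in its output."""
    return "Please contact admin@example.com for help."


reply = guarded_call("My SSN is 123-45-6789, email jo@example.com.", fake_model)
```

The mapping returned by `redact` is discarded here; a real system could store it securely so that authorized callers can restore the original values in the final response.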

Skyflow goes beyond traditional data security solutions by offering a complete security and privacy infrastructure optimized for AI workflows. Its modular architecture means you can tailor privacy features to your specific needs – whether it’s training domain-specific models, building customer-facing agents, or scaling enterprise-grade AI solutions.

By embedding security and privacy at the core of your AI systems, Skyflow empowers you to innovate responsibly, build customer trust, and maintain compliance, all without compromising the performance or functionality of your agents.

Tying It All Together

Incorporating privacy-preserving techniques isn’t just a compliance exercise – it’s a strategic advantage. As consumers become more aware of data privacy, organizations that prioritize privacy in their AI agents can differentiate themselves, building trust and loyalty. For sectors like healthcare, finance, and education, where data privacy is non-negotiable, privacy-preserving AI agents enhance user confidence and align with industry standards. Additionally, as AI agents become more advanced and integrated into sensitive workflows, the risk of data breaches will inevitably rise. Poorly secured tools, weak access controls, or mishandled sensitive data can lead to significant trust and compliance challenges.

Building agents with a privacy-first architecture, where encryption, access controls, and auditing are embedded from the start, is essential to mitigate these risks. Organizations that prioritize privacy will protect sensitive data and ensure trust and long-term success in an AI-driven world.